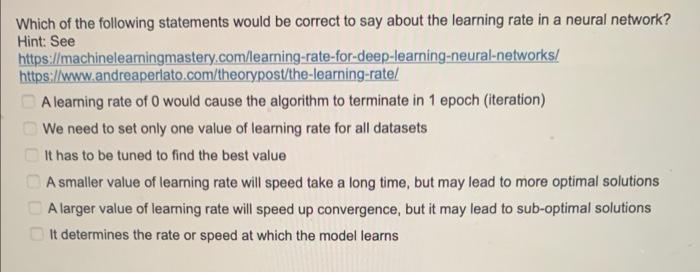

Regularization Machine Learning Mastery

Dropout regularization is a computationally cheap way to regularize a deep neural network. Put simply, regularization is a preventive technique that stops a machine learning model from overfitting.
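Part of why dropout is cheap is that it needs only a random mask and an elementwise multiply per layer. The minimal NumPy sketch below assumes the *inverted dropout* convention (the one used by the Keras and PyTorch dropout layers): during training each unit is zeroed with probability `rate` and the survivors are scaled by `1 / (1 - rate)`, so no rescaling is needed at inference time. The function `dropout` and its signature are hypothetical, for illustration only, and are not any library's API.

```python
import numpy as np


def dropout(activations: np.ndarray, rate: float,
            rng: np.random.Generator, training: bool = True) -> np.ndarray:
    """Hypothetical inverted-dropout helper (illustrative, not a library API)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time (or with rate 0) dropout leaves the input untouched.
        return activations
    keep_prob = 1.0 - rate
    # Bernoulli mask: each unit is kept with probability keep_prob.
    mask = rng.random(activations.shape) < keep_prob
    # Scale the kept units so the expected activation matches inference time.
    return activations * mask / keep_prob


rng = np.random.default_rng(0)
x = np.ones((2, 5))
y = dropout(x, rate=0.5, rng=rng)  # each entry is either 0.0 or 2.0
```

Because a fresh mask is drawn on every call, each training step effectively trains a different thinned sub-network, while inference uses the full network unchanged.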

Regularisation Techniques In Machine Learning And Deep Learning By Saurabh Singh Analytics Vidhya Medium

Other common regularization techniques include data augmentation and early stopping.
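Of the two techniques named above, early stopping is the easier one to sketch: watch the validation loss after each epoch, stop once it has not improved for a set number of epochs, and keep the best weights seen. The helper `early_stopping_epoch` below is hypothetical, and the loss values are made up for illustration; it works on a precomputed list of validation losses rather than a real training loop.

```python
from typing import Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 3,
                         min_delta: float = 0.0) -> int:
    """Hypothetical helper: return the epoch whose weights early stopping keeps.

    Training halts once the validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs; the best epoch seen so far
    is returned (its weights would be restored).
    """
    best_epoch, best_loss = 0, float("inf")
    stale_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Validation loss improved: remember this epoch and reset the counter.
            best_epoch, best_loss, stale_epochs = epoch, loss, 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                break  # stop training; later epochs are never run
    return best_epoch


# Illustrative (made-up) validation losses that start to rise as the model overfits.
losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 0.6]
print(early_stopping_epoch(losses, patience=3))  # 2
```

The `patience` and `min_delta` parameters are similar in spirit to the options of the same names on the Keras `EarlyStopping` callback. A larger patience tolerates noisier validation curves at the cost of extra epochs: here `patience=5` would reach the final 0.6 and return epoch 6.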

Regularization is used in machine learning as a solution to overfitting: it reduces the variance of the model under consideration. The goal of regularization is to avoid overfitting by penalizing more complex models.

In its general form, regularization adds an extra term to the cost function. Regularization is one of the most important concepts in machine learning. In this post you will discover the dropout regularization technique and how to apply it.
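The text does not write this extra term out. In common textbook notation (assumed here; the source gives no formula), the regularized cost is

$$
\tilde{J}(\mathbf{w}) = J(\mathbf{w}) + \lambda\,\Omega(\mathbf{w}), \qquad \lambda \ge 0,
$$

where $J$ is the original cost (for linear regression, the residual sum of squares), $\Omega$ is a penalty on the weights $\mathbf{w}$, and $\lambda$ sets its strength. The two most common penalties are

$$
\Omega_{L2}(\mathbf{w}) = \|\mathbf{w}\|_2^2 = \sum_j w_j^2, \qquad
\Omega_{L1}(\mathbf{w}) = \|\mathbf{w}\|_1 = \sum_j |w_j|.
$$

Setting $\lambda = 0$ recovers the unregularized cost, while a larger $\lambda$ makes large weights, and hence more complex models, more expensive.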

Regularization is one of the techniques used to control overfitting in highly flexible models. In machine learning, regularization is any modification made to a learning algorithm that is intended to reduce its generalization error.

Regularization in Machine Learning: What Is Regularization? This is an important theme in machine learning. Regularization can be split into two buckets.

Regularization is among the most crucial concepts in machine learning. Regularization can be implemented in … What is regularization?

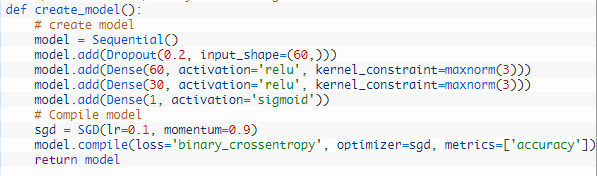

Dropout is a simple and powerful regularization technique for neural networks and deep learning models. I have covered the entire concept in two parts.

Regularization is one of the most basic and important concepts in machine learning. Part 1 deals with the theory.

Machine learning involves equipping computers to perform specific tasks without explicit instructions, so the systems are programmed to learn and improve from experience. How does regularization work?

Dropout works by probabilistically removing, or "dropping out", inputs to a layer, which may be input variables in the data sample or activations from a previous layer.

Regularization works by adding a penalty, also called a complexity or shrinkage term, to the residual sum of squares (RSS) of the complex model.
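For linear regression with an L2 (squared-weight) penalty, the penalized objective RSS(w) + λ‖w‖² is ridge regression, and it has a closed-form minimiser. The NumPy sketch below assumes this ridge setting (an L1 penalty, the lasso, has no closed form and is not shown). The helpers `ridge_fit` and `penalized_rss` and the synthetic data are hypothetical, chosen only to show that a larger penalty shrinks the weights.

```python
import numpy as np


def ridge_fit(X: np.ndarray, y: np.ndarray, lam: float) -> np.ndarray:
    """Hypothetical helper: minimise RSS(w) + lam * ||w||^2 in closed form.

    Solves (X^T X + lam * I) w = X^T y. No intercept is fitted, so the data
    are assumed to be centred (or, as below, generated without an intercept).
    """
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)


def penalized_rss(X: np.ndarray, y: np.ndarray, w: np.ndarray, lam: float) -> float:
    """Residual sum of squares plus the L2 shrinkage term."""
    residuals = y - X @ w
    return float(residuals @ residuals + lam * (w @ w))


# Synthetic data (made up for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
true_w = np.array([3.0, -2.0, 0.0, 0.5, 1.0])
y = X @ true_w + rng.normal(scale=0.5, size=50)

for lam in (0.0, 1.0, 10.0, 100.0):
    w = ridge_fit(X, y, lam)
    print(f"lam={lam:>5}: ||w|| = {np.linalg.norm(w):.3f}")
```

With `lam=0.0` this reduces to ordinary least squares; as `lam` grows, the printed weight norm shrinks toward zero, trading a little extra training error for lower variance.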

What Are The Main Regularization Methods Used In Machine Learning Quora

Regularization In Machine Learning Simplilearn

Regularization In Machine Learning And Deep Learning By Amod Kolwalkar Analytics Vidhya Medium

Regularization Techniques Regularization In Deep Learning

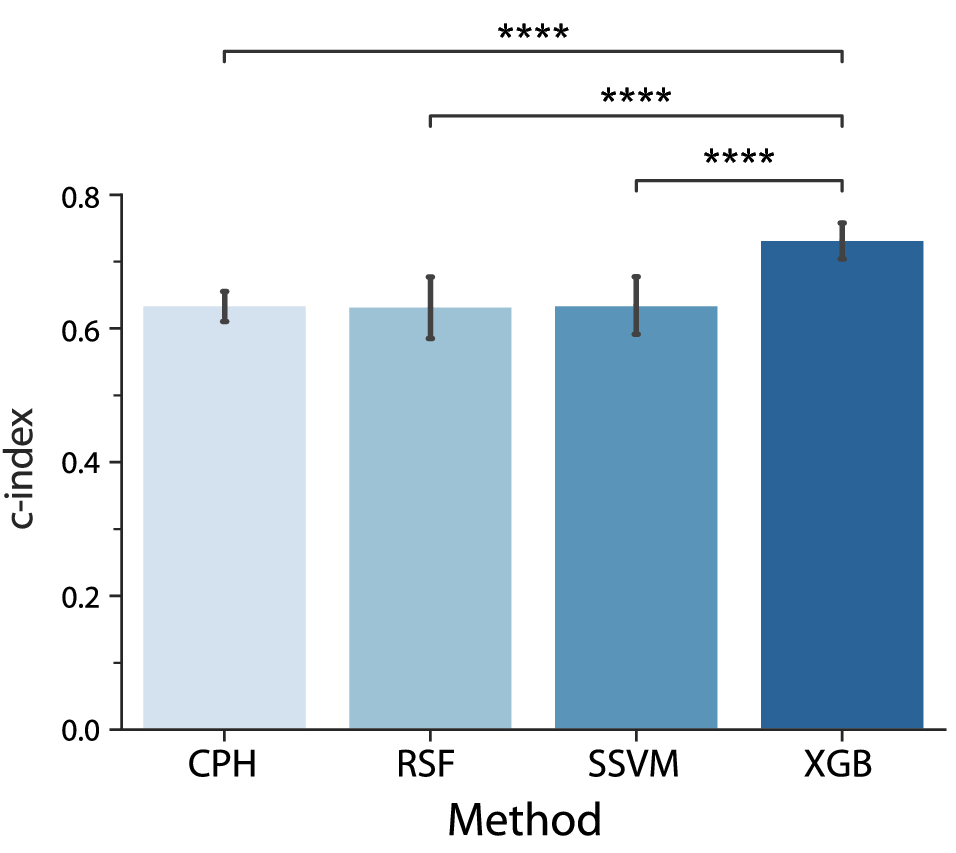

Explainable Machine Learning Can Outperform Cox Regression Predictions And Provide Insights In Breast Cancer Survival Scientific Reports

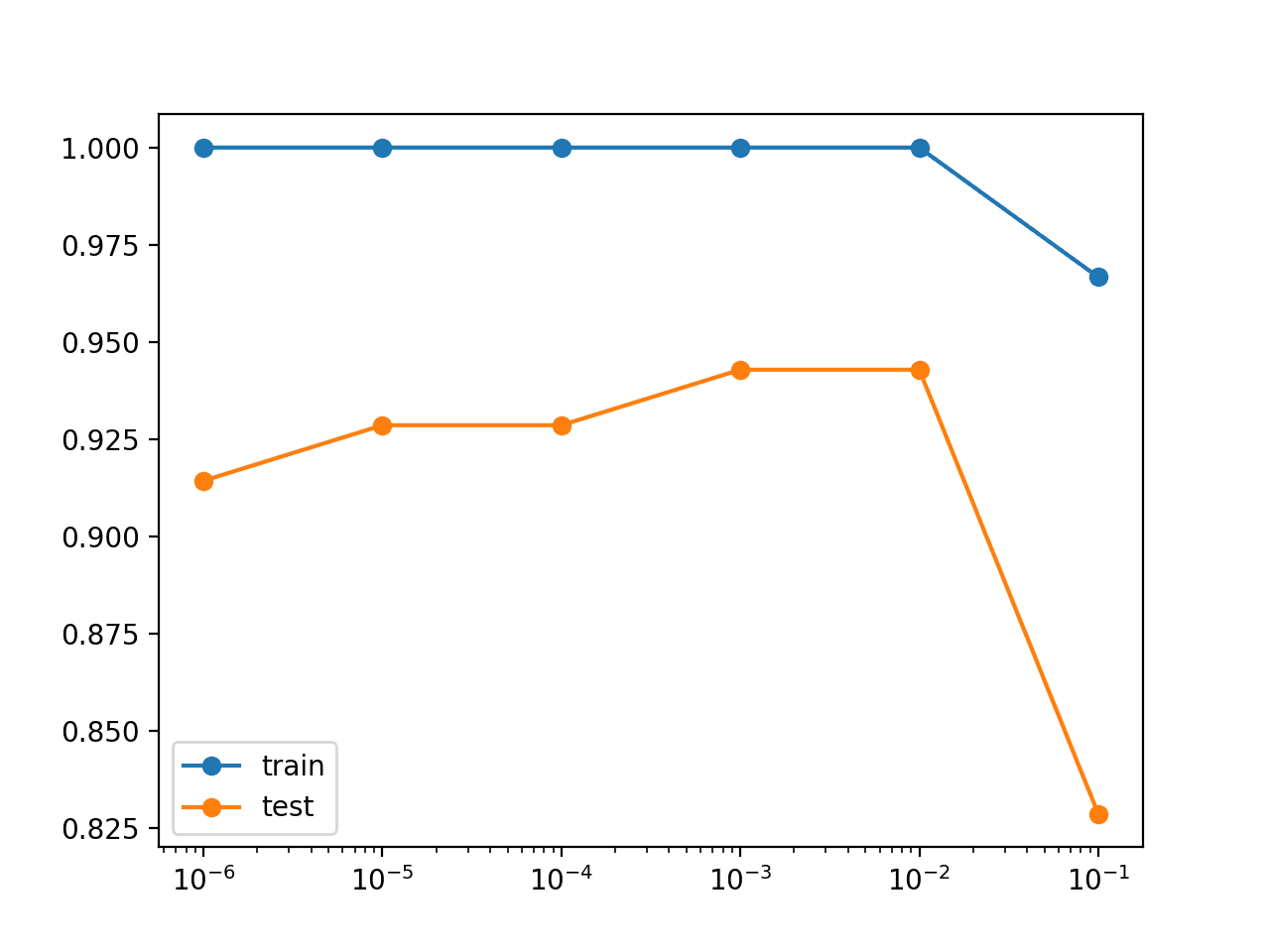

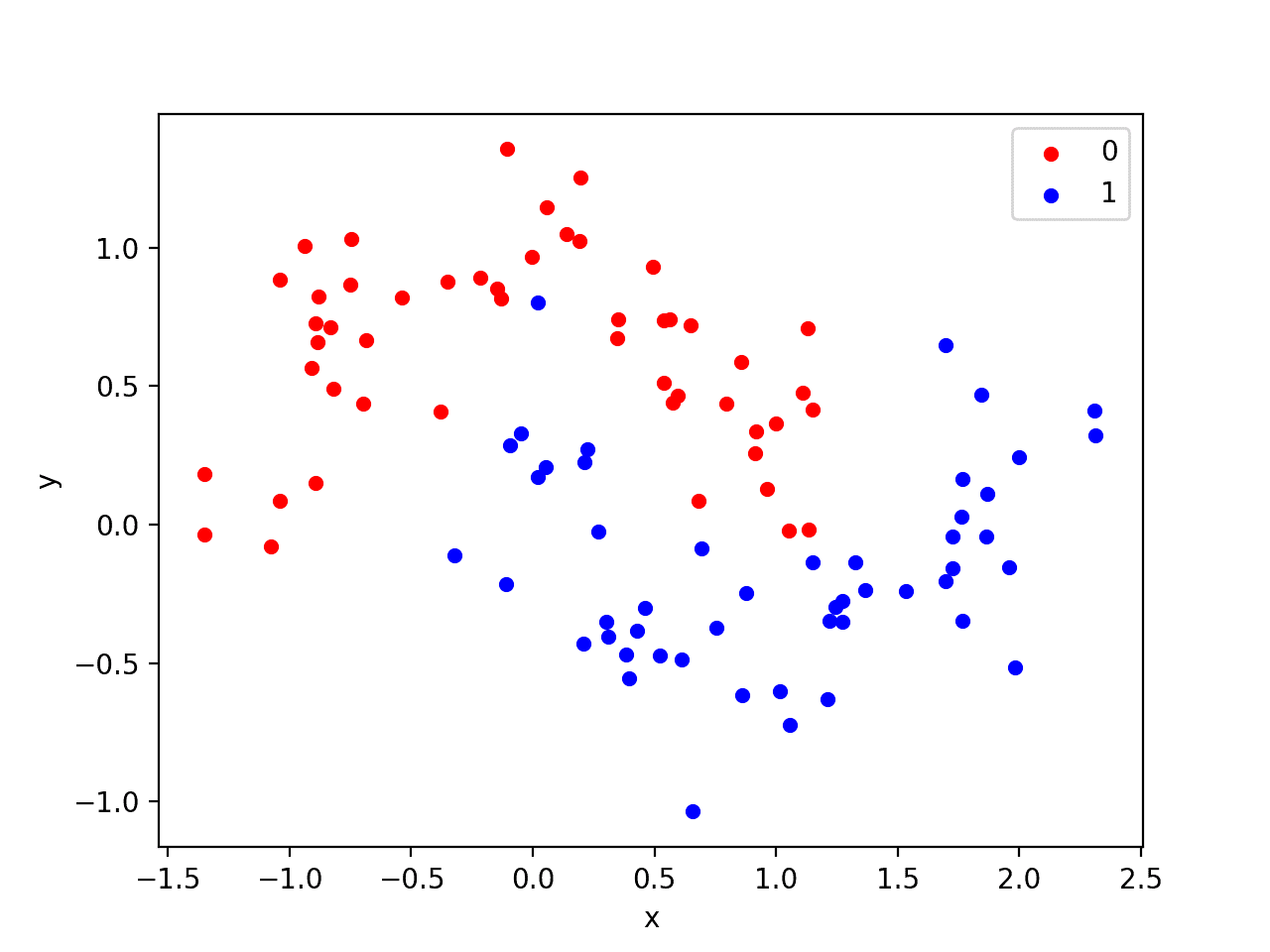

How To Use Weight Decay To Reduce Overfitting Of Neural Network In Keras

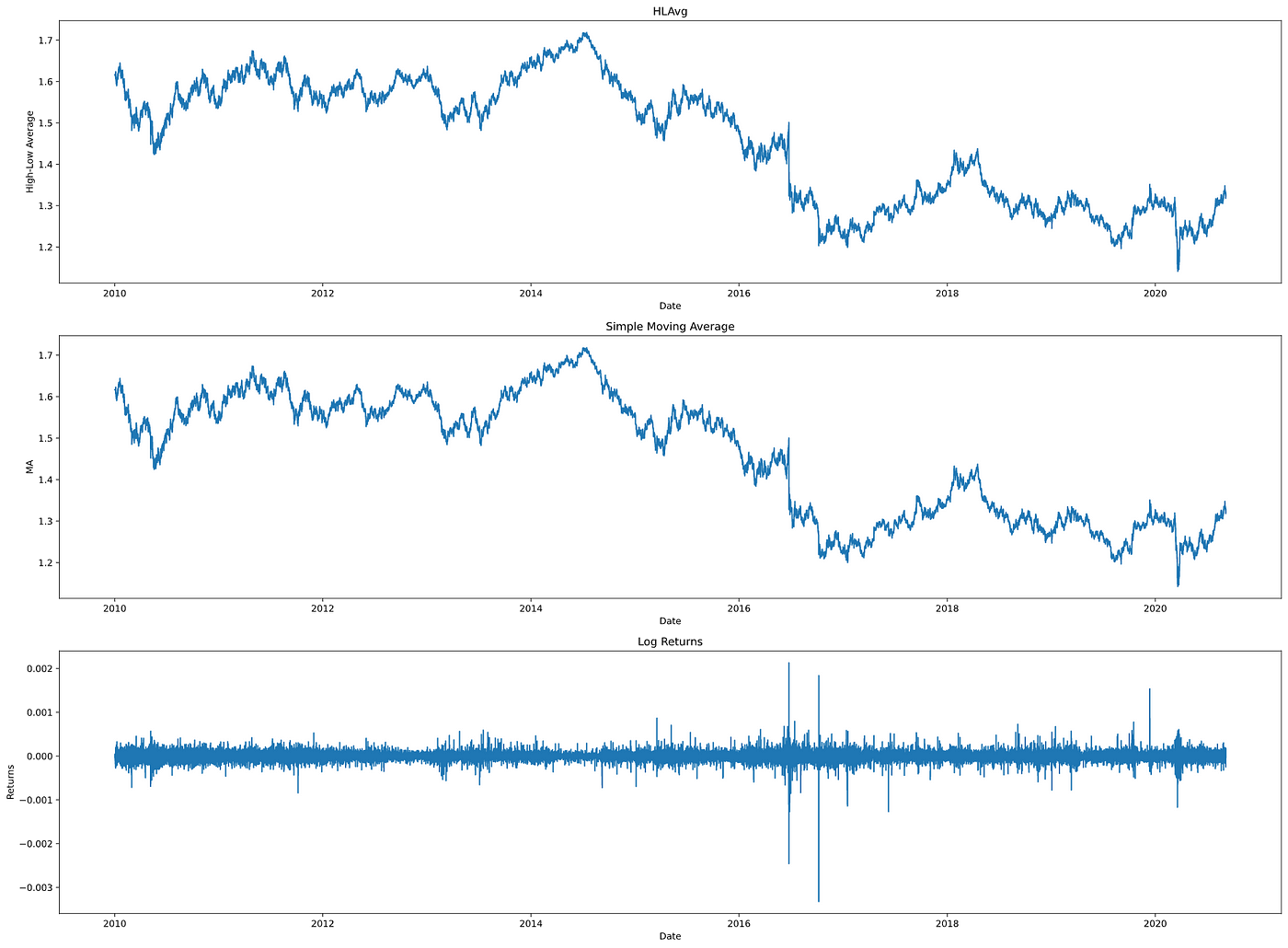

Pragmatic Deep Learning Model For Forex Forecasting Multi Step Prediction Adam Tibi Towards Ai

Github Rohanpillai20 Sgd For Linear Regression With L2 Regularization Code For Stochastic Gradient Descent For Linear Regression With L2 Regularization

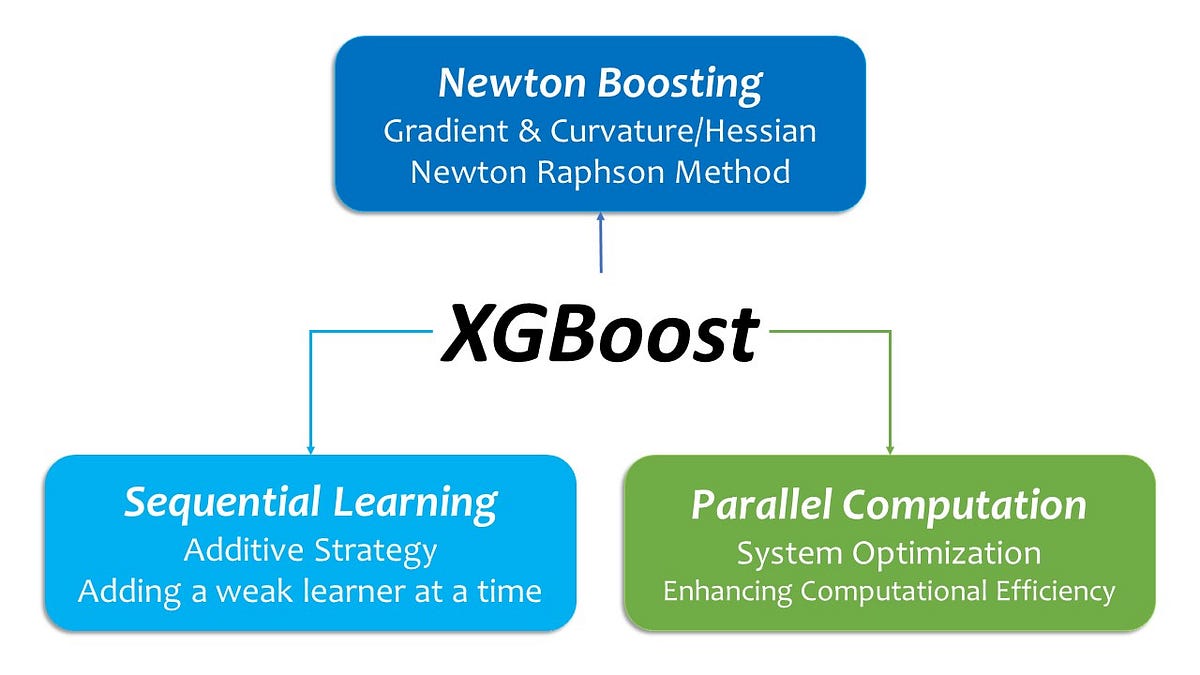

Xgboost Its Genealogy Its Architectural Features And Its Innovation By Michio Suginoo Oct 2022 Towards Data Science

Machine Learning Review Note, Alex Tech Bolg's Blog, CSDN Blog

Fighting Overfitting With L1 Or L2 Regularization Which One Is Better Neptune Ai

Regularization In Machine Learning Regularization Example Machine Learning Tutorial Simplilearn Youtube

Xgboost In A Nutshell Xgboost Stands For Extreme Gradient By Joel Jorly Artificial Intelligence In Plain English

Machine Learning Tricks4better Performance Deep Learning Garden

A Gentle Introduction To Activation Regularization In Deep Learning

Machine Learning Mastery Jason Brownlee Machine Learning Mastery With Python 2016